When Executives Ask “What Should We Do With AI?”: A Practical Path to High-Impact AI Agents

When I’m working with stakeholders in our AI Practice at geniant, I often end up translating “we want AI agents” into the business problem underneath it. Executives don’t know what they don’t know, but they’re usually trying to answer one of three things:

Where can we apply AI across our business functions where it will materially matter?

How do we move our key metrics with AI/agentic flows in product X?

We want to redesign how work gets done with AI. Where do we start?

What’s the answer? The strongest ROI consistently comes from redesigning the work itself (new flows, new agent responsibilities, and often new human roles) rather than bolting AI onto legacy processes and calling it “transformation.”

But organizations are at different stages in their AI journey, so each of these three questions can be the right starting point depending on the situation. Let’s take a look.

Making It Relatable to Stakeholders: AI Agents Are “Jobs,” Not Features

I’ve found the fastest way to align a cross-functional group is to start with a simple framing:

AI agents are best understood as “jobs” that can plug into workflows.

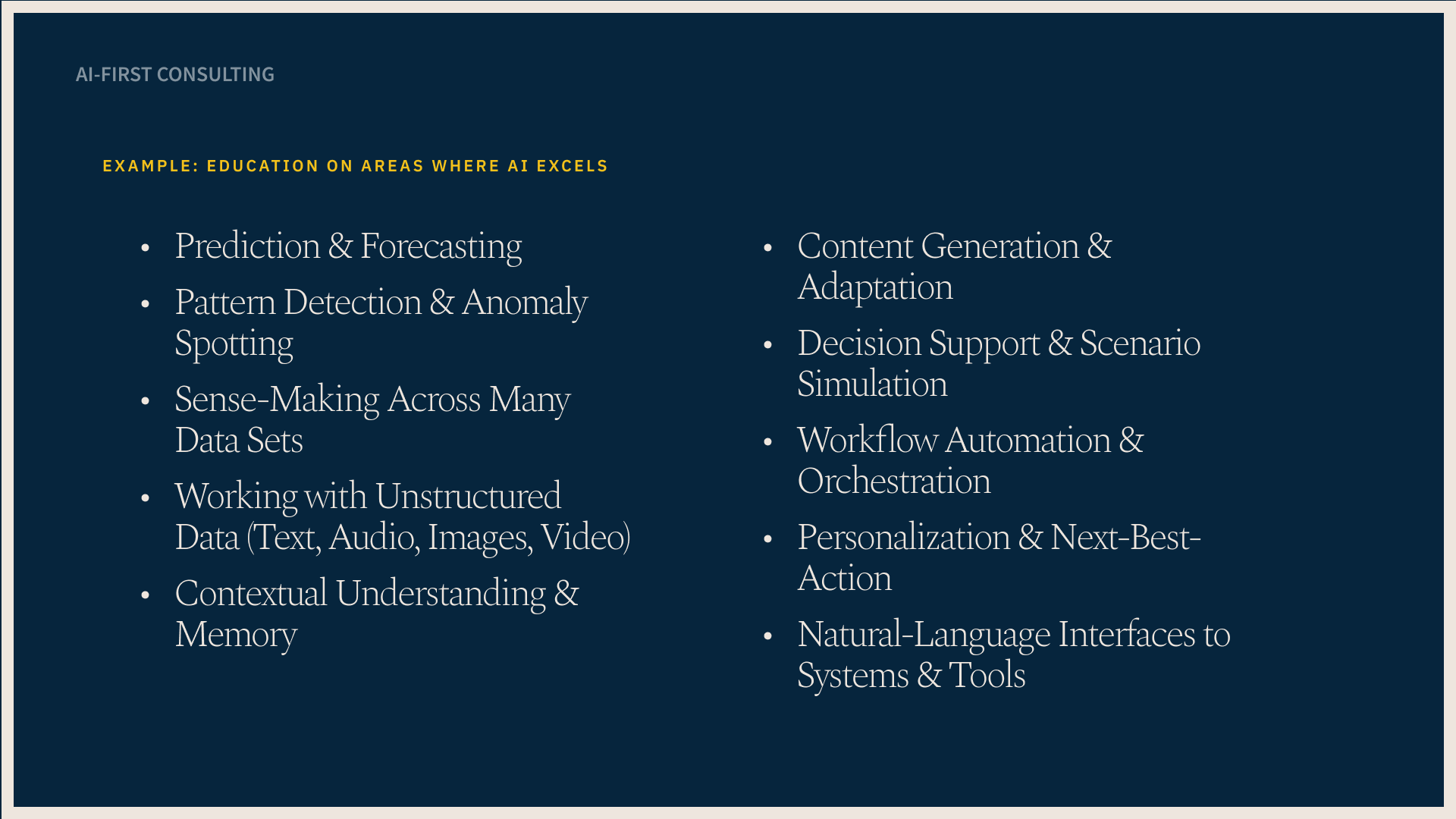

Here are the “jobs” I use most often (because they map cleanly to enterprise work):

Prediction & forecasting (what’s likely next: churn, risk, demand, delinquency)

Pattern detection & anomaly spotting (what looks off: fraud, errors, emerging issues)

Sense-making across systems (summarize and reconcile across tools, tickets, notes, policies)

Decision support & scenario simulation (recommend actions with tradeoffs and confidence)

Generation & explanation (draft, explain, translate, personalize, structure)

Orchestration (move work across steps/systems; route, escalate, track, follow up)

Once stakeholders share this vocabulary, ideation becomes less “AI magic” and more “where in the workflow does this job remove friction or reduce risk?”

Business Functions & ROI

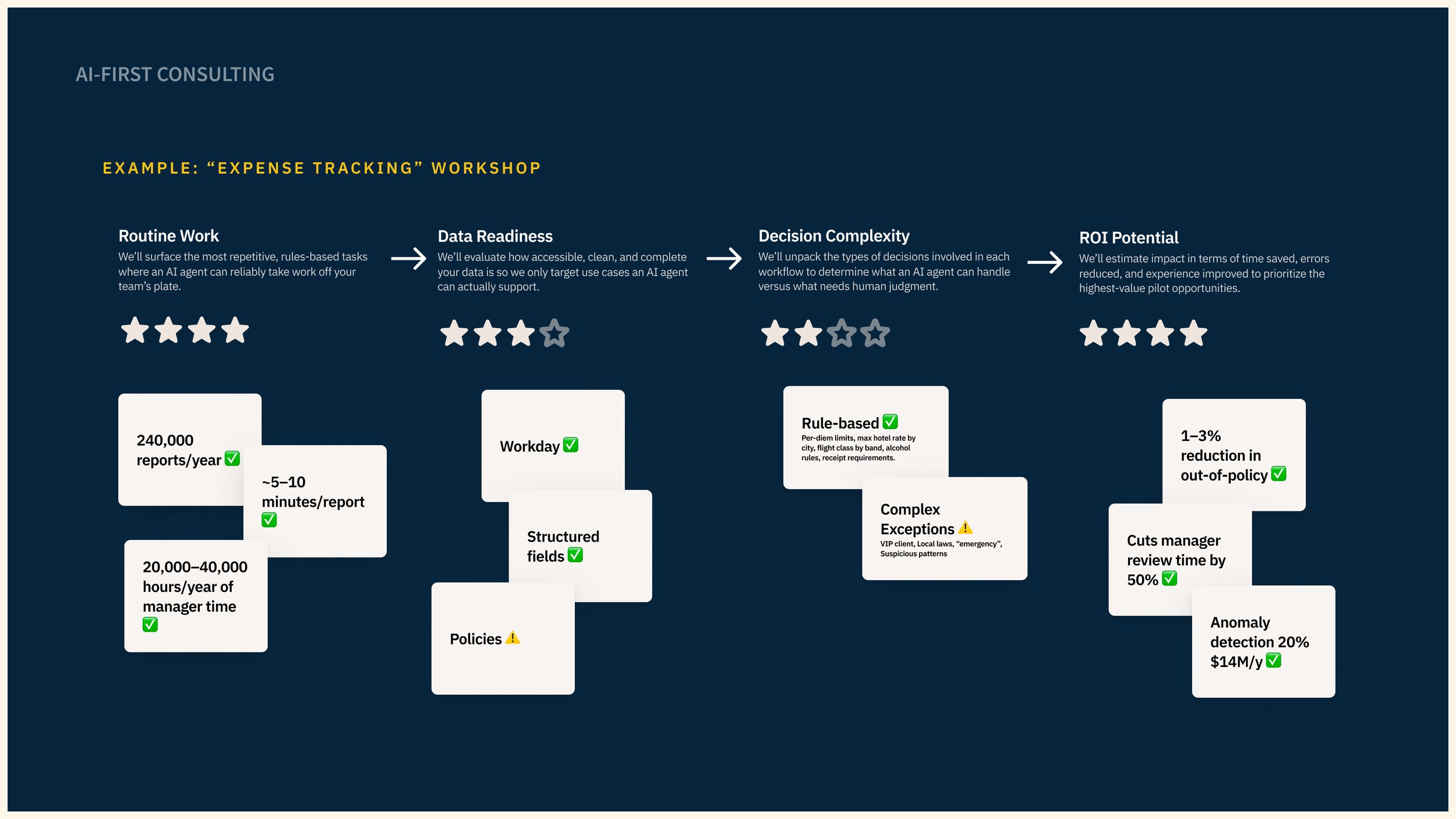

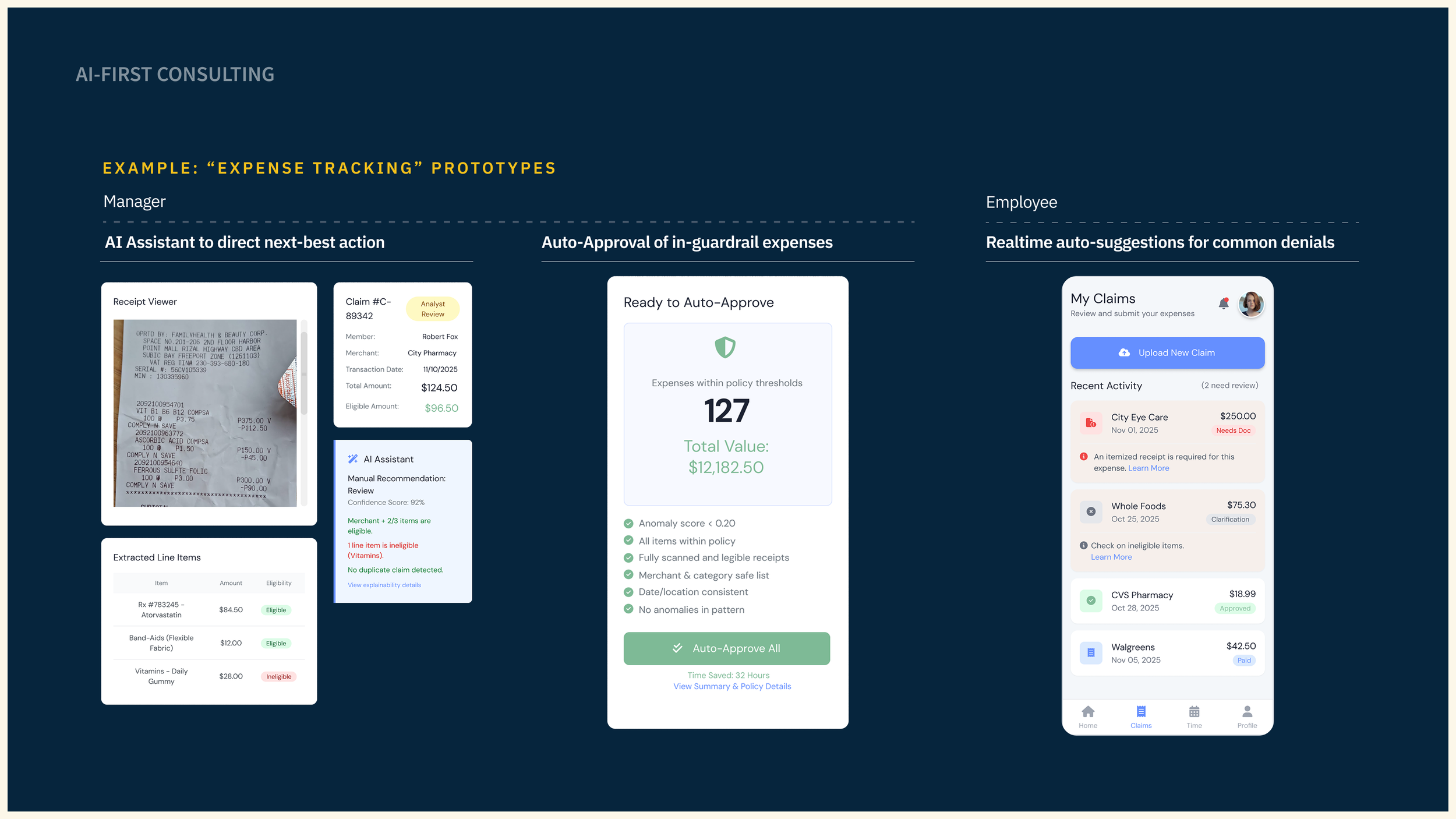

If you want a strong, fast path to ROI, you should bias toward work that is:

Routine, high volume, and high friction: the most repetitive, rules-based tasks, where an AI agent can reliably take work off your team's plate.

Backed by clean data: data that is accessible, clean, and complete enough that an AI agent can actually support the use case.

At the right level of decision complexity: workflows where you have unpacked the types of decisions involved and know what an AI agent can handle versus what needs human judgment.

If you’re looking to redesign work, you can ask:

“If we were designing this function from scratch today, would we build this step at all?”

That’s how you find the work that shouldn’t exist, and where agentic systems can drive a big impact.

How to Tie “AI Ideation” to Scorecards

When stakeholders ask, “How can we improve our key metrics on Product X using AI?” I keep the conversation to a tight five-part sequence:

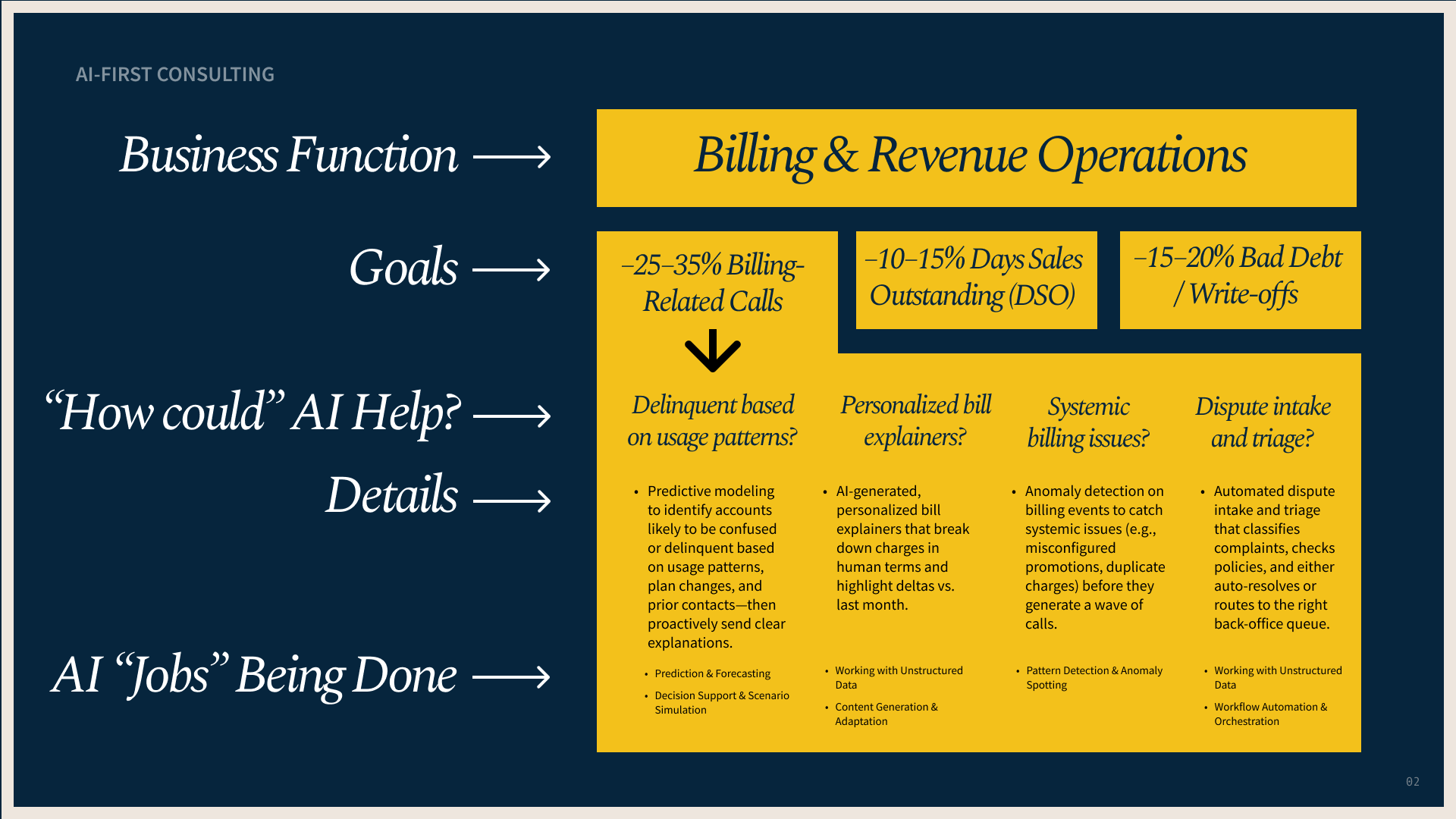

Name the business function + product area

Example: Billing & Revenue Operations.

Anchor on the scorecard

Example: Reduce billing-related calls by 25–35%.

Brainstorm in the form of metric levers

Not “features,” but mechanisms:

flag likely confusion or delinquency based on usage patterns

generate personalized bill explainers at the moment of truth

detect systemic billing issues before call spikes

streamline dispute intake and triage

Write a single epic statement

Example:

“Predict accounts likely to be confused or delinquent based on usage patterns, plan changes, and prior contacts, then proactively deliver clear explanations and next steps, and route exceptions to the right queue.”

Tag the AI jobs being used

Example: Prediction & forecasting + decision support + explanation generation.

This forces clarity: what are we changing, which metrics are moving, and which capability is doing the work?
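If it helps to make the five-part sequence concrete, here is a minimal Python sketch of one opportunity captured as a structured record. The names `AIJob`, `OpportunityCard`, and `gaps` are hypothetical, chosen for illustration only; the values come from the billing example above. The one check it performs is reporting which of the five parts were left empty.

```python
from dataclasses import dataclass
from enum import Enum


class AIJob(Enum):
    """The six agent "jobs" from the list earlier in this article."""
    PREDICTION = "Prediction & forecasting"
    PATTERN_DETECTION = "Pattern detection & anomaly spotting"
    SENSE_MAKING = "Sense-making across systems"
    DECISION_SUPPORT = "Decision support & scenario simulation"
    GENERATION = "Generation & explanation"
    ORCHESTRATION = "Orchestration"


@dataclass
class OpportunityCard:
    """One opportunity, with one field per part of the sequence (hypothetical structure)."""
    business_function: str   # 1. business function + product area
    scorecard_target: str    # 2. the scorecard metric to move
    levers: list[str]        # 3. metric levers (mechanisms, not features)
    epic: str                # 4. the single epic statement
    jobs: list[AIJob]        # 5. AI jobs being used

    def gaps(self) -> list[str]:
        """Return the names of any parts that are still empty."""
        return [name for name, value in vars(self).items() if not value]


card = OpportunityCard(
    business_function="Billing & Revenue Operations",
    scorecard_target="Reduce billing-related calls by 25–35%",
    levers=[
        "flag likely confusion or delinquency based on usage patterns",
        "generate personalized bill explainers at the moment of truth",
    ],
    epic=(
        "Predict accounts likely to be confused or delinquent, then proactively "
        "deliver clear explanations and route exceptions to the right queue."
    ),
    jobs=[AIJob.PREDICTION, AIJob.DECISION_SUPPORT, AIJob.GENERATION],
)

print(card.gaps())  # an empty list means all five parts are filled in
```

A card with gaps is a useful signal in a workshop: an idea with no scorecard target or no tagged job usually is not ready to prioritize yet.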

The Real Value: Redesigning Work

Here’s what I emphasize when I’m consulting: you cannot responsibly redesign workflows you don’t understand.

So the most durable path looks like:

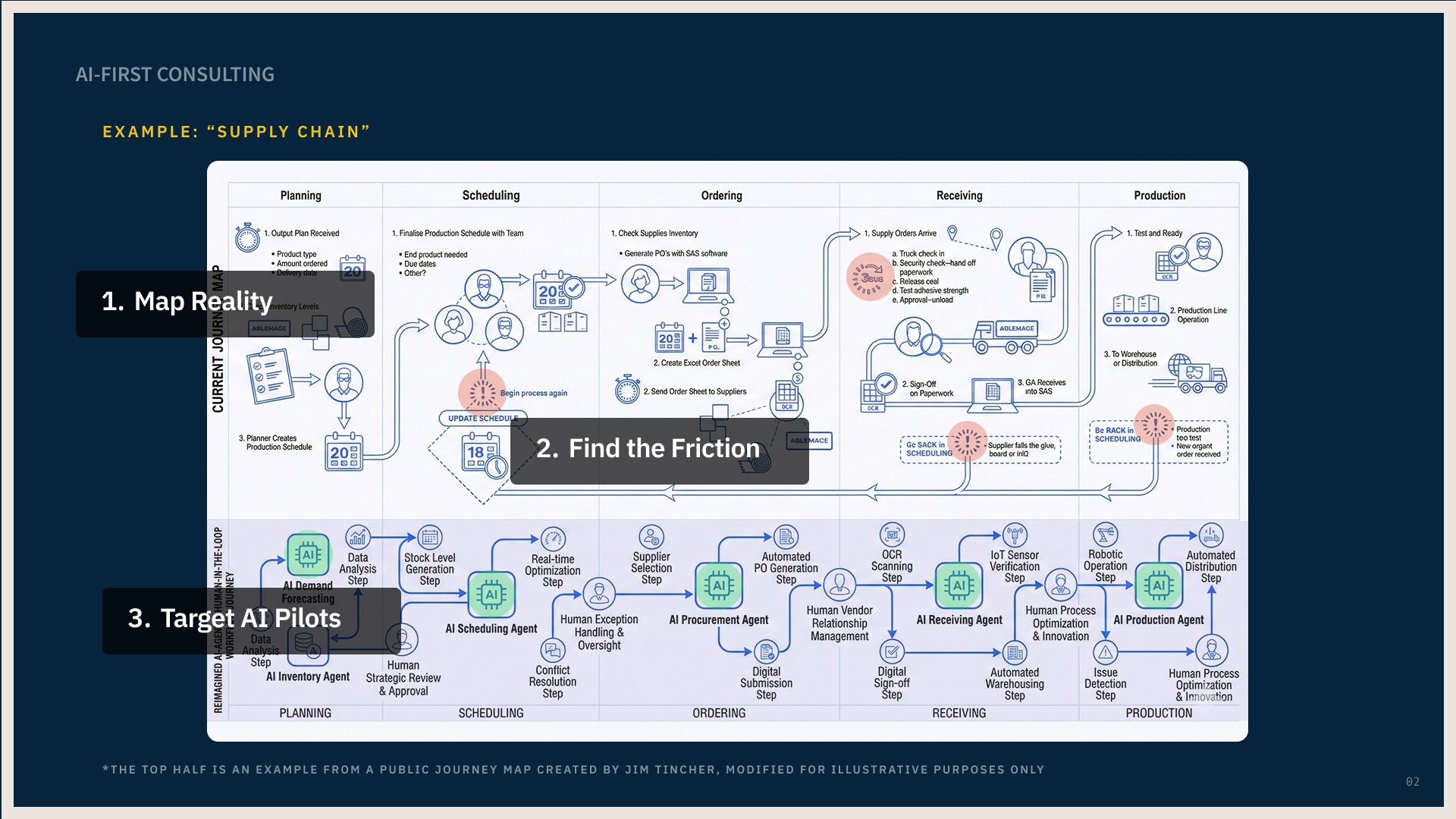

Map Reality: Map the real journey end-to-end: tasks, handoffs, tools, exceptions, and pain points—how it truly happens, not how the deck says it should.

Find the Friction: Identify bottlenecks, rework loops, swivel-chair moments, status-chasing, and repetitive steps that create delays or inconsistency.

Target AI Pilots: Identify specific moments where AI can safely assist—supporting decisions, handling routine work, or orchestrating steps across systems and channels—with clear human review points.

Prioritize Impact: Align opportunities to business goals, data readiness, and implementation effort so you end with a focused roadmap of high-impact pilots, not a wish list.

Because at the end of the day, it’s hard to redesign work you don’t truly understand.

A Practical Starting Point: The 90-Minute “Workshop-in-a-Box”

If you want to answer “Where can AI matter across our business?” without months of analysis, run this session with a cross-functional team (product, ops, CX, engineering, data, and a decision-maker).

Outcome: 3–5 prioritized opportunities + validation plans.

0:00–0:10 — Set the frame

Confirm which executive question you’re answering

Define success (“3–5 opportunities we can validate in two weeks”)

Output: scope + success statement

0:10–0:20 — AI job primer

Review the “jobs” list

Add 1–2 examples from your domain

Output: shared language

0:20–0:40 — Map Reality Lite

Brainstorm a list of candidate workflows and choose one that is high volume

Map trigger → steps → tools → handoffs → exceptions → outcomes

Output: lightweight workflow map

0:40–0:55 — Find the Friction

Tag hotspots: rework, swivel-chair, exception clusters, knowledge hunting

Output: 5–8 friction hotspots

0:55–1:15 — Target AI pilots

For each hotspot: pick an AI job, define assist vs automate, identify human-in-the-loop points

Output: 3–5 pilot concepts (one sentence each)

1:15–1:25 — Feasibility + data readiness filter

Score each pilot: data readiness, integration complexity, risk, effort, measurability

Output: ranked shortlist

1:25–1:30 — Next steps

Assign owners + a two-week validation plan (prototype type + success metrics)

Output: action plan
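The feasibility filter in the agenda above (1:15–1:25) can be run on a whiteboard, but a small script keeps the ranking honest. The sketch below is one possible way to do it, not a prescribed method: it assumes a 1-to-5 scale, equal weights by default, and that integration complexity, risk, and effort count against a pilot (so they are inverted). The pilot names echo the billing levers from earlier; the scores are made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# The five criteria named in the agenda step.
CRITERIA = ("data_readiness", "integration_complexity", "risk", "effort", "measurability")

# Assumption: for these criteria a higher raw score is worse, so we invert them.
INVERTED = {"integration_complexity", "risk", "effort"}


@dataclass
class Pilot:
    name: str
    scores: dict[str, int]  # each criterion scored 1 (low) to 5 (high)


def feasibility(pilot: Pilot, weights: Optional[dict[str, float]] = None) -> float:
    """Weighted average on a 1-5 scale, where higher means more feasible."""
    weights = weights or {c: 1.0 for c in CRITERIA}
    total = 0.0
    for criterion in CRITERIA:
        raw = pilot.scores[criterion]
        if not 1 <= raw <= 5:
            raise ValueError(f"{pilot.name}: {criterion} must be between 1 and 5")
        adjusted = 6 - raw if criterion in INVERTED else raw
        total += weights[criterion] * adjusted
    return total / sum(weights[c] for c in CRITERIA)


def rank(pilots: list[Pilot], weights: Optional[dict[str, float]] = None) -> list[Pilot]:
    """Return pilots ordered from most to least feasible."""
    return sorted(pilots, key=lambda p: feasibility(p, weights), reverse=True)


# Illustrative scores only; in the session these come from the group.
pilots = [
    Pilot("Dispute intake triage", {
        "data_readiness": 3, "integration_complexity": 4,
        "risk": 3, "effort": 4, "measurability": 3,
    }),
    Pilot("Personalized bill explainers", {
        "data_readiness": 4, "integration_complexity": 2,
        "risk": 2, "effort": 2, "measurability": 5,
    }),
]

for pilot in rank(pilots):
    print(f"{feasibility(pilot):.1f}  {pilot.name}")
```

If the decision-maker cares more about one criterion, pass a `weights` dictionary (for example, doubling `data_readiness`) and see whether the shortlist order changes. If it does, that is worth discussing before assigning owners.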

Something to watch out for:

When I’m working with stakeholders, the first question (“where could this matter?”) is the doorway to everything else. It’s also where many organizations waste time:

They brainstorm 50 use cases.

They pick the flashiest ones.

They ignore data readiness and operational constraints.

They ship a pilot that can’t scale, can’t be trusted, or can’t be measured.

A better approach is to run a short, structured session that produces a prioritized shortlist of agent opportunities that are both valuable and feasible, along with a path to quickly validate them.

Closing: Where the ROI Actually Lives

When I’m consulting at geniant, the most productive stakeholder conversations happen when we stop asking, “Where can AI fit?” and start asking:

Which work should disappear—and what new workflow replaces it?

That shift turns AI agents from a novelty into an operating model change.

And that’s where the ROI lives.